GEO for E-commerce: 500K Queries to AI Citations (2025)

Who this is for: Heads of Content/SEO, Product Managers, and AI/data teams who want their brand to appear inside AI answers (Google AI Overviews, ChatGPT, Perplexity, Claude)—not only in classic SERPs.

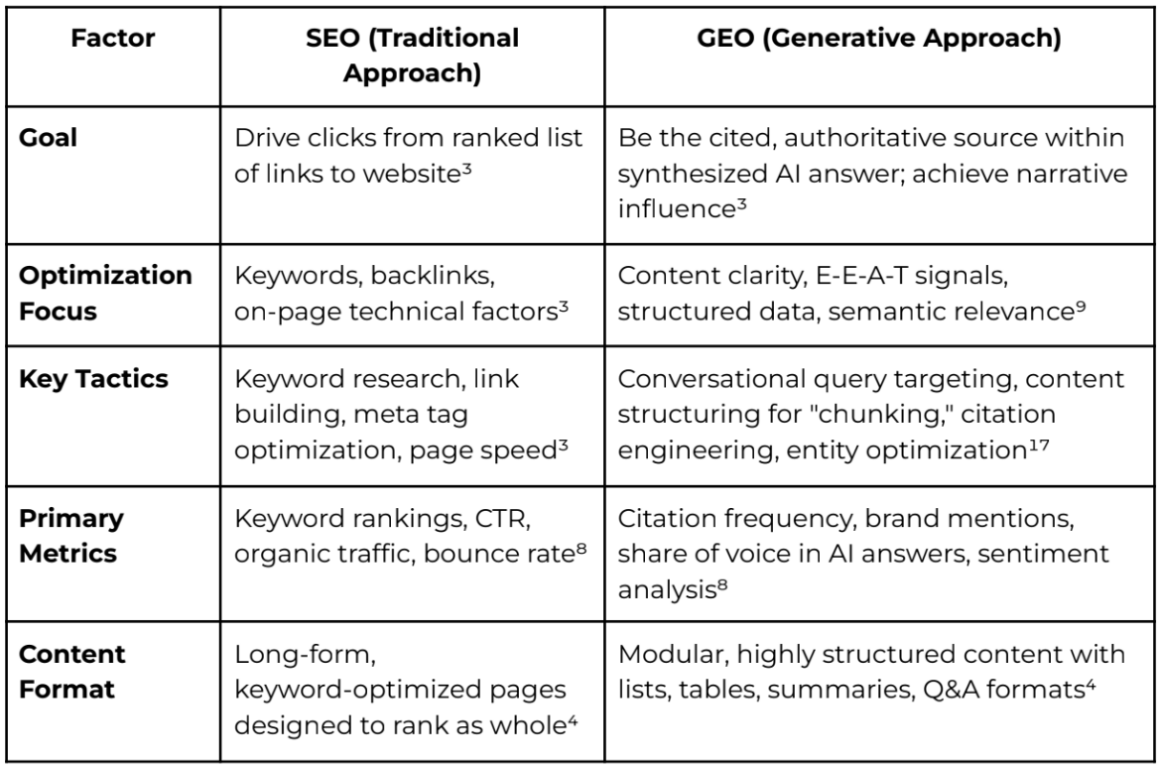

What is GEO and why should a furniture brand care?

Generative Engine Optimization (GEO) is the discipline of creating, structuring, and amplifying content and brand signals so AI systems can confidently quote you. For DreamSofa, a furniture e-commerce brand, GEO captures zero-click demand, builds trust through consistent signals, and can secure a first-mover advantage in discovery experiences where users read answers, not lists of links.

Trade-off: Choosing GEO to earn citations inside AI answers means paying for more data plumbing, stronger content governance, and ongoing knowledge-base upkeep. The cost is higher than “traditional SEO only,” but your share of voice where attention is shifting can be dramatically larger.

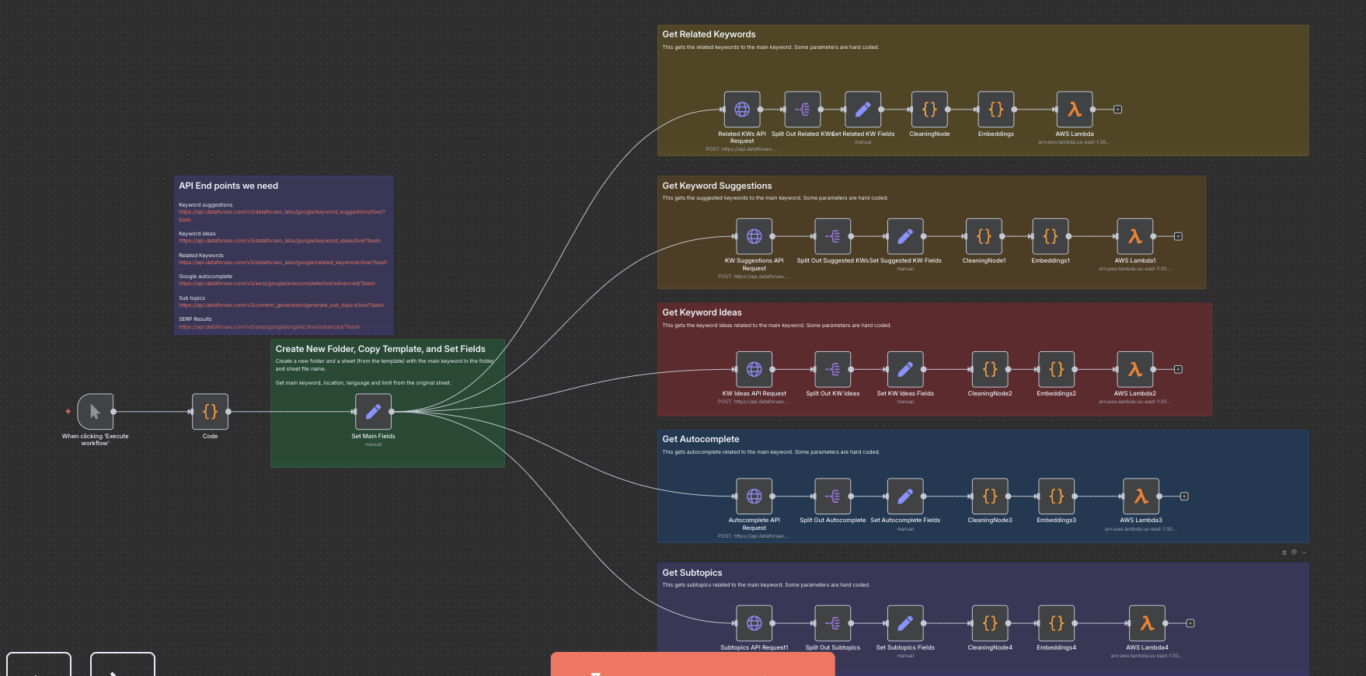

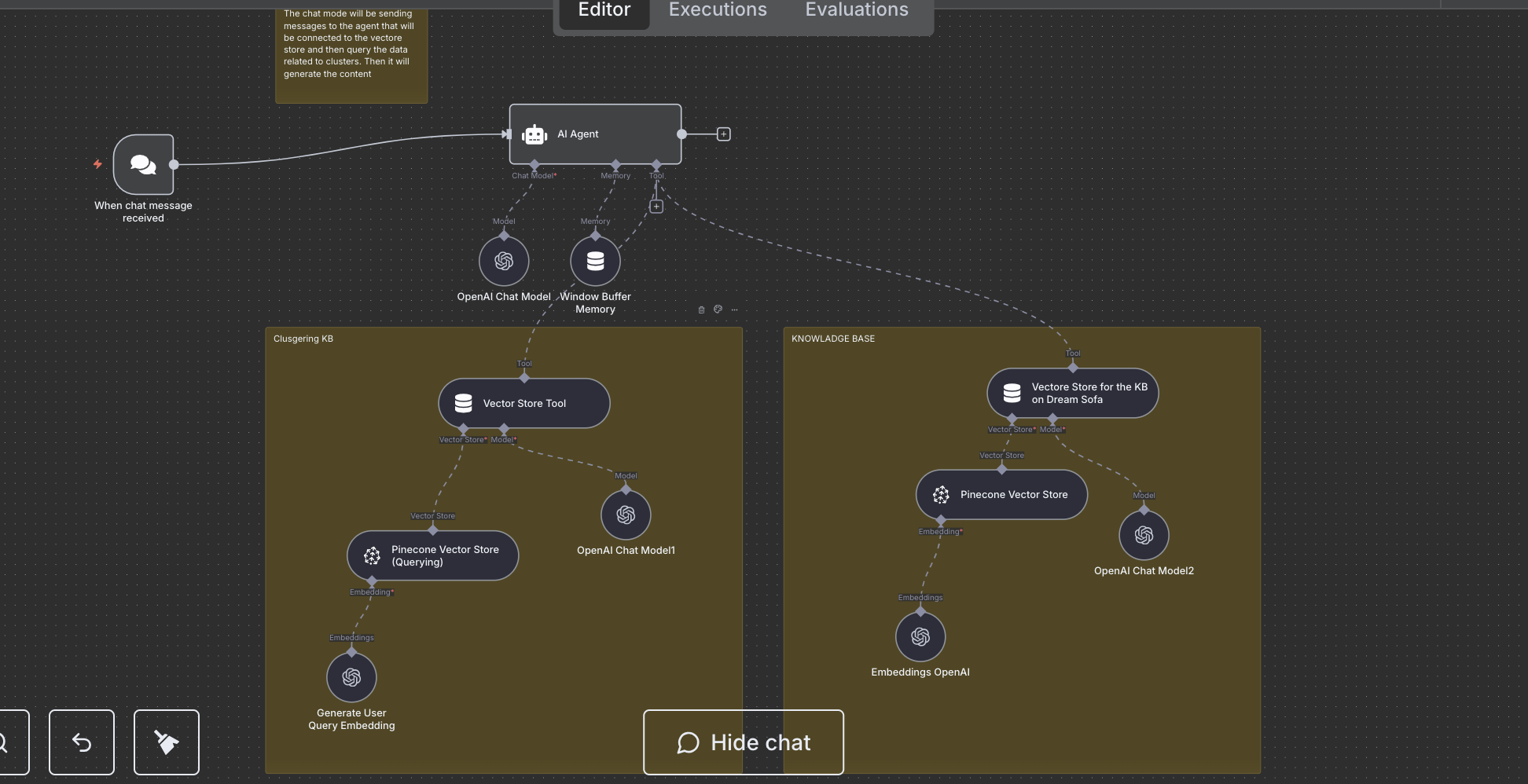

How does the DreamSofa pipeline work end-to-end?

The stack runs daily: we gather real Google queries, cluster them by meaning, store the resulting themes as long-term memory, and generate GEO-ready drafts with a chatbot that cites your Knowledge Base (KB). The outcome is trending questions, topic clusters, and structured drafts aligned with user intent and E-E-A-T (Experience, Expertise, Authoritativeness, Trustworthiness).

Step 1 — Daily query harvesting (DataForSEO)

Every day a cron trigger collects keywords, questions, related topics, autocomplete, and subtopics from real Google searches via the DataForSEO API. We keep the “market’s phrasing” fresh for ontology building and FAQ coverage instead of relying on a static keyword list.
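A minimal sketch of how each daily pull could be folded into a living record of user phrasing. It assumes the DataForSEO results have already been fetched and flattened into `(phrase, source)` pairs, where `source` names the pull type (keyword, question, related topic, autocomplete, subtopic), and that pulls are merged in date order. `QueryRecord` and `merge_daily_pull` are hypothetical names, not part of any DataForSEO client.

```python
from dataclasses import dataclass, field
from datetime import date
from typing import Dict, List, Set, Tuple


@dataclass
class QueryRecord:
    """One normalized user phrasing and when/where it has been observed."""
    phrase: str
    first_seen: date
    last_seen: date
    sources: Set[str] = field(default_factory=set)
    days_seen: int = 1


def normalize(phrase: str) -> str:
    # Lowercase and collapse whitespace so trivial variants share one key.
    return " ".join(phrase.lower().split())


def merge_daily_pull(record: Dict[str, QueryRecord],
                     pull: List[Tuple[str, str]], day: date) -> int:
    """Fold one day's (phrase, source) pairs into `record`; return how many phrasings are new."""
    new_count = 0
    for phrase, source in pull:
        key = normalize(phrase)
        if not key:
            continue
        entry = record.get(key)
        if entry is None:
            record[key] = QueryRecord(key, day, day, {source})
            new_count += 1
            continue
        entry.sources.add(source)
        if entry.last_seen != day:
            entry.days_seen += 1  # count each calendar day once
            entry.last_seen = day
    return new_count
```

Tracking `days_seen` and `last_seen` is one way to separate persistent phrasings from one-day spikes when prioritizing FAQ entries.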

Step 2 — Clustering & intent understanding

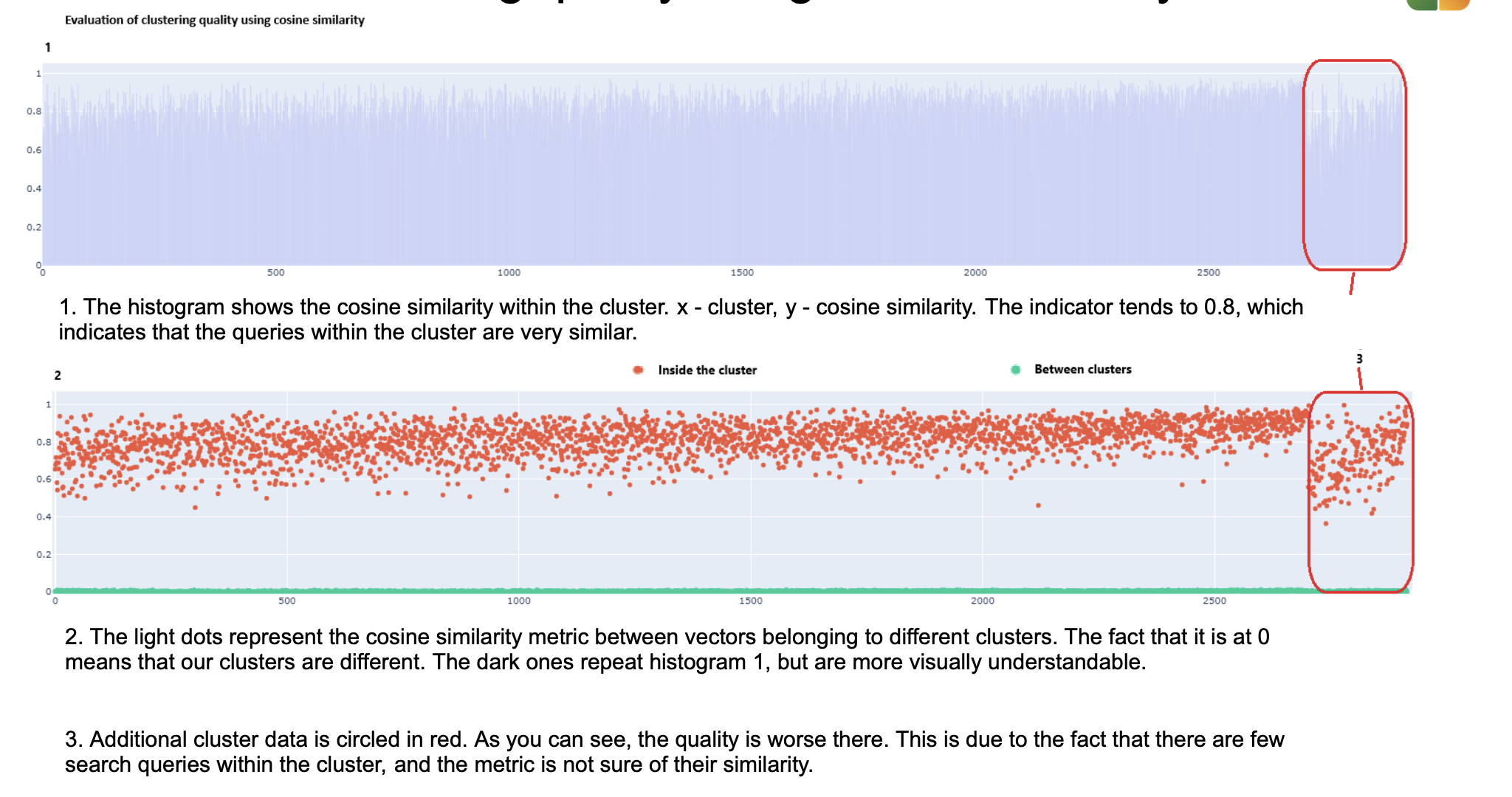

We embed queries and cluster them with HDBSCAN to reveal the themes users actually care about. In the proof of concept (PoC) we processed ~500k queries, forming 2,703 main clusters (minimum cluster size 40) plus 661 additional clusters (minimum cluster size 20). The result is a set of topic maps suited to editorial planning and FAQ coverage.
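The article does not spell out how the two cluster tiers were produced. One plausible reading, assumed here, is a second pass with the smaller minimum size over the points the first pass labeled as noise. The sketch shows only that orchestration: `cluster_fn` is any function returning one label per point with -1 for noise, and the optional `hdbscan_labels` adapter assumes scikit-learn 1.3 or later (which ships `sklearn.cluster.HDBSCAN`) is installed. Both function names are hypothetical.

```python
from typing import Callable, List, Sequence, Tuple

# A labeler takes (vectors, min_cluster_size) and returns one label per point; -1 means noise.
Labeler = Callable[[Sequence[Sequence[float]], int], List[int]]


def hdbscan_labels(vectors: Sequence[Sequence[float]], min_cluster_size: int) -> List[int]:
    """Optional adapter for scikit-learn >= 1.3, imported lazily so the sketch runs without it."""
    from sklearn.cluster import HDBSCAN

    return HDBSCAN(min_cluster_size=min_cluster_size).fit_predict(vectors).tolist()


def two_pass_clusters(vectors, cluster_fn: Labeler, main_min: int = 40,
                      extra_min: int = 20) -> Tuple[List[int], int, int]:
    """Cluster everything at main_min, then re-cluster the leftover noise at extra_min.

    Returns (labels, n_main, n_extra); extra clusters get ids after the main ones.
    """
    labels = list(cluster_fn(vectors, main_min))
    n_main = len({lab for lab in labels if lab != -1})
    noise_idx = [i for i, lab in enumerate(labels) if lab == -1]
    remap = {}
    if len(noise_idx) >= extra_min:
        offset = max(labels) + 1  # first id not used by the main pass
        sub_labels = cluster_fn([vectors[i] for i in noise_idx], extra_min)
        for i, sub in zip(noise_idx, sub_labels):
            if sub == -1:
                continue  # still noise after the second pass
            labels[i] = remap.setdefault(sub, offset + len(remap))
    return labels, n_main, len(remap)
```

In production, `two_pass_clusters(embeddings, hdbscan_labels)` would apply the 40/20 minimum sizes from the PoC; the guard on `len(noise_idx)` simply skips the second pass when too few noise points remain.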

Step 3 — Long-term memory in Pinecone

We load clusters into a Pinecone vector store—the system’s “smart memory.” The chatbot retrieves the most relevant ideas and phrasings at generation time, so drafts reflect how people actually search. Versioning and fast semantic lookups support scale without losing precision.
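Pinecone's own client is not shown here. As a stand-in, this in-memory sketch illustrates the two operations the step relies on: upserting cluster vectors with metadata (including a version tag) and returning the top-k matches by cosine similarity. `ClusterMemory` and its methods are hypothetical; a real index would use Pinecone's approximate nearest-neighbour search instead of this brute-force scan.

```python
import math
from typing import Dict, List, Optional, Tuple


def cosine(a: List[float], b: List[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0


class ClusterMemory:
    """Brute-force, in-memory stand-in for a vector index of cluster embeddings."""

    def __init__(self) -> None:
        self._items: Dict[str, Tuple[List[float], dict]] = {}

    def upsert(self, item_id: str, vector: List[float], metadata: dict) -> None:
        # Upserting an existing id overwrites it, so a re-embedded cluster replaces the old entry.
        self._items[item_id] = (vector, metadata)

    def query(self, vector: List[float], top_k: int = 3,
              version: Optional[str] = None) -> List[Tuple[str, float, dict]]:
        """Return the top_k (id, score, metadata) hits, optionally restricted to one version."""
        hits = [
            (item_id, cosine(vector, vec), meta)
            for item_id, (vec, meta) in self._items.items()
            if version is None or meta.get("version") == version
        ]
        hits.sort(key=lambda hit: hit[1], reverse=True)
        return hits[:top_k]
```

The version filter is one simple way to keep generation pinned to a known cluster snapshot while a newer one is being evaluated.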

Step 4 — Content engine with RAG

The content engine generates GEO-ready drafts using Retrieval-Augmented Generation (RAG): clusters provide current market language, your KB provides canonical facts. Prompts enforce structure (H1/H2/H3, short answers under headings, lists/tables, honest pros/cons) to maximize snippetability and citation potential.
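A minimal sketch of how the two retrieval sources could be combined into one generation prompt: cluster phrasings supply the market's language, KB chunks supply facts with IDs the model must cite, and the instructions encode the structural rules described above. The function name, the `[KB:<id>]` citation format, and the exact wording are illustrative assumptions, not the production prompt.

```python
from typing import List, Tuple

STRUCTURE_RULES = [
    "Use one H1, then H2/H3 sections; phrase at least 60% of H2s as questions.",
    "Open every H2/H3 with a self-contained answer of 40-60 words, then add detail.",
    "Prefer lists and tables for specs and comparisons.",
    "Include an honest Pros & Cons block.",
    "Cite every factual claim as [KB:<id>] using only the sources below; "
    "if no source supports a claim, leave it out.",
]


def build_prompt(topic: str, phrasings: List[str], kb_chunks: List[Tuple[str, str]]) -> str:
    """Assemble a RAG prompt from cluster phrasings and (chunk_id, text) KB facts."""
    lines = [f"Write a GEO-ready article about: {topic}", "", "Structure rules:"]
    lines += [f"- {rule}" for rule in STRUCTURE_RULES]
    lines += ["", "How users phrase this topic (cover these questions):"]
    lines += [f"- {p}" for p in phrasings]
    lines += ["", "Knowledge Base sources:"]
    lines += [f"[KB:{cid}] {text}" for cid, text in kb_chunks]
    return "\n".join(lines)
```

Keeping the rules in a list makes it easy to version them alongside the templates the editors maintain.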

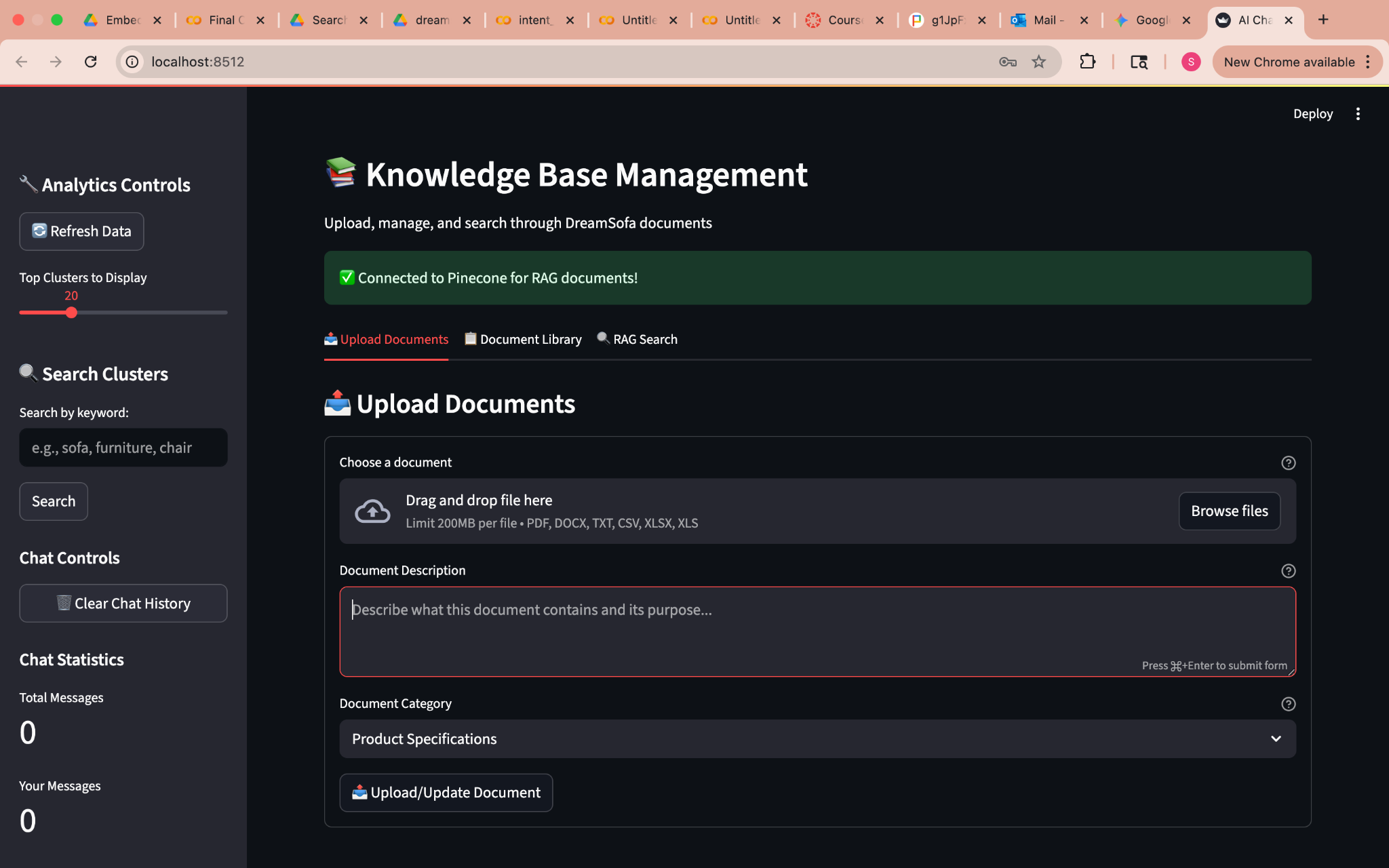

How do we connect and govern the Knowledge Base to strengthen E-E-A-T?

The KB UI lets your team upload PDF, DOCX, Excel, and TXT files, then describe, categorize, update, and deduplicate them. Behind the scenes, documents are chunked and embedded so the AI can retrieve and cite them. This boosts trust: because drafts are grounded in your own materials, hallucinations become much less likely and claims can be traced back to a source.
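The chunk-and-deduplicate step could look roughly like this, assuming documents have already been extracted to plain text (PDF/DOCX/Excel parsing is out of scope). The function names, chunk size, overlap, and the hash-based exact-duplicate check are illustrative choices, not the pipeline's actual settings; catching near-duplicates would need embeddings or shingling on top.

```python
import hashlib
from typing import Dict, List


def chunk_words(text: str, size: int = 200, overlap: int = 40) -> List[str]:
    """Split text into windows of `size` words that overlap by `overlap` words."""
    if overlap >= size:
        raise ValueError("overlap must be smaller than size")
    words = text.split()
    chunks = []
    step = size - overlap
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + size]))
        if start + size >= len(words):
            break  # the last window already reaches the end of the text
    return chunks


def dedup_chunks(doc_chunks: Dict[str, List[str]]) -> List[dict]:
    """Keep the first occurrence of each normalized chunk across all documents."""
    seen = set()
    kept = []
    for doc_id, chunks in doc_chunks.items():
        for i, chunk in enumerate(chunks):
            normalized = " ".join(chunk.lower().split())
            digest = hashlib.sha256(normalized.encode("utf-8")).hexdigest()
            if digest in seen:
                continue
            seen.add(digest)
            kept.append({"doc_id": doc_id, "chunk_no": i, "text": chunk, "sha256": digest})
    return kept
```

Overlapping windows reduce the chance that a fact is split across two chunks and retrieved only halfway.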

Trade-off: Stronger trust and traceability come with operational discipline—consistent descriptions, categorization, updates, and version hygiene. Without governance, RAG will surface stale chunks and degrade factual quality.
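One way to keep stale chunks out of retrieval, sketched under assumptions: each chunk carries hypothetical `doc_id`, `doc_version`, and `updated` metadata fields, and a chunk is eligible only if it belongs to the current version of its document and was refreshed within a review window. The same pass yields a "share of refreshed KB chunks" figure. The field names and the 180-day window are illustrative, not the team's actual policy.

```python
from datetime import date, timedelta
from typing import Dict, List, Tuple


def eligible_chunks(chunks: List[dict], current_versions: Dict[str, int],
                    today: date, max_age_days: int = 180) -> Tuple[List[dict], float]:
    """Return (chunks safe to retrieve, share of all chunks that are fresh)."""
    cutoff = today - timedelta(days=max_age_days)
    fresh = [
        c for c in chunks
        # Drop chunks from superseded document versions and chunks past the review window.
        if c["doc_version"] == current_versions.get(c["doc_id"])
        and c["updated"] >= cutoff
    ]
    share = len(fresh) / len(chunks) if chunks else 0.0
    return fresh, share
```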

How we build E-E-A-T signals into every article

Strong GEO content is not just keyword-aligned. It must also look trustworthy to both humans and AI systems. We deliberately embed Experience, Expertise, Authoritativeness, and Trustworthiness (E-E-A-T) signals into every long-form piece, product guide, and FAQ block.

Experience: real usage, real outcomes

- We include first-hand testing notes from real usage (comfort tests, fabric durability, assembly process, delivery experience) instead of generic marketing claims.

- We reference customer outcomes with specifics (e.g. “This sectional was installed in a 72 m² condo in Miami and survived two moves without frame warping”).

- We add brief “before/after” context or scenario photos, when possible, to show we have actually handled the product.

Expertise: qualified authors, not anonymous blog posts

- Every article is published with a byline that includes relevant credentials, for example: “By Sarah Chen, Certified Interior Designer (NCIDQ), 8+ years in residential space planning.”

- We cite applicable standards and specs instead of vague adjectives. For furniture this can include BIFMA/ANSI durability ratings, fabric abrasion scores (Martindale), foam densities, and warranty terms.

- We clearly distinguish opinion (“best for small apartments”) from spec-backed fact (“frame is kiln-dried hardwood, not particle board”).

Authoritativeness: proof we’re not just guessing

- We reference external trust signals: awards, press mentions, commercial partnerships, large client installs.

- We surface recognizable comparables (“This recliner is in the same comfort class as [well-known brand model], but with modular arms for easier delivery through narrow doors”).

- We link to long-term ownership data when available (e.g. 12-month wear feedback from repeat buyers, return rate percentages).

Trustworthiness: policies, guarantees, transparency

- We include return/warranty terms in plain language (“30-day no-questions return, free pickup in continental U.S.”) next to the recommendation, not hidden in legal pages.

- We summarize third-party review aggregates (for example, average review score, % 4-5 star ratings, common complaints) instead of cherry-picking only praise.

- We disclose trade-offs in performance (“This sleeper sofa is comfortable for guests under 185 cm. Taller sleepers will feel foot overhang.”).

Why this matters: AI systems increasingly appear to quote content that reads as coming from accountable humans with verifiable experience and measurable claims, rather than generic copy. When an answer includes bylined expertise, specific numbers, and clear limitations, we expect it to be more likely to be extracted and cited as an authoritative snippet.

How do we write GEO-ready posts models can quote?

We write from intent and ontology. At least 60% of H2s are phrased as questions, and under every H2/H3 we place a self-contained 40–60-word answer, followed by detail. We use tables and lists for clean extraction, then add case-study numbers and balanced pros/cons to satisfy E-E-A-T. We also attribute claims (“abrasion-resistant to 50,000 rubs on the Martindale test”) to either internal testing or manufacturer certification, so both shoppers and AI models can trace where a statement came from.

- Blocks to include: entity map (features, integrations, use cases, audiences, limits), precise definitions (“X is …”), case studies with numbers, honest Pros & Cons, and a large user-language FAQ.
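The two measurable rules above (the share of question H2s and the 40–60-word answers) can be checked mechanically before an editor reads a draft. This sketch assumes drafts are Markdown with `##`/`###` headings and treats the first paragraph under each heading as its short answer; `split_sections` and `check_short_answers` are hypothetical names.

```python
import re
from typing import List

HEADING = re.compile(r"^(#{2,3})\s+(.+)$")


def split_sections(markdown: str) -> List[dict]:
    """Return one dict per H2/H3 with its level, title, and first-paragraph lines."""
    sections: List[dict] = []
    for line in markdown.splitlines():
        m = HEADING.match(line)
        if m:
            sections.append({"level": len(m.group(1)), "title": m.group(2).strip(),
                             "answer": [], "closed": False})
        elif sections and not sections[-1]["closed"]:
            sec = sections[-1]
            if line.strip():
                sec["answer"].append(line.strip())
            elif sec["answer"]:
                sec["closed"] = True  # a blank line ends the first paragraph
    return sections


def check_short_answers(markdown: str, lo: int = 40, hi: int = 60) -> dict:
    """Report the question share of H2s and headings whose short answer is out of range."""
    sections = split_sections(markdown)
    h2s = [s for s in sections if s["level"] == 2]
    share = sum(s["title"].endswith("?") for s in h2s) / len(h2s) if h2s else 0.0
    out_of_range = [s["title"] for s in sections
                    if not lo <= len(" ".join(s["answer"]).split()) <= hi]
    return {"question_share": share, "meets_60pct": share >= 0.6,
            "answers_out_of_range": out_of_range}
```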

Trade-off: Highly scannable Q&A structures can feel less “literary.” We keep brand voice in the expansion paragraphs while preserving question/answer snippets for voice search and featured-style extracts.

Balanced Pros & Cons: structured honesty the model can trust

Every product, solution, or buying path we describe ships with a dedicated Pros & Cons block. This is not generic fluff (“high quality / can be expensive”). We write specific, testable trade-offs so the reader – and the AI model – can see that we are being honest, not promotional.

Example:

- Pros: Memory foam conforms to body shape and reduces pressure points by ~30% compared to standard spring mattresses, based on reported shoulder/hip pressure relief in side sleepers.

- Cons: Retains heat and can sleep 8-10°C warmer than hybrid or gel-infused alternatives, which may be uncomfortable for hot sleepers or warm climates.

Why this matters for GEO and E-E-A-T:

- It signals trustworthiness. We are openly describing where a product is not ideal.

- It produces quotable contrast. Models like to answer “Who is this for / not for?” and will lift this exact structured language.

- It reduces buyer friction. Users scanning AI answers want “Will this work for my situation?” not just dimensions and colors.

In practice, our editorial rule is: no recommendation without a trade-off. If we say “Best for studio apartments,” we must also say “Not great if you need deep seat depth for lounging.” That balance increases perceived credibility for both human readers and AI systems choosing which sources to cite.
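The rule “no recommendation without a trade-off” can also run as a pre-publication check. A minimal sketch, assuming each recommendation is stored as a record with hypothetical `name`, `best_for`, `not_for`, `pros`, and `cons` fields: it flags any entry that states a fit without a matching limitation, or lists pros with no cons.

```python
from typing import List


def missing_tradeoffs(recommendations: List[dict]) -> List[str]:
    """Return names of recommendations that are one-sided (fit without limit, pros without cons)."""
    flagged = []
    for rec in recommendations:
        one_sided_pros = bool(rec.get("pros")) and not rec.get("cons")
        one_sided_fit = bool(rec.get("best_for")) and not rec.get("not_for")
        if one_sided_pros or one_sided_fit:
            flagged.append(rec["name"])
    return flagged
```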

How do we measure GEO success?

We track citations inside AI answers, frequency of mentions, sentiment, and share of voice across clusters—alongside classic SEO metrics (positions, CTR, and organic sessions). Operationally, we watch pipeline stability, Pinecone latency, and the % of refreshed KB chunks to keep answers current.

- Content QA: % of sections with 40–60-word answers under H2/H3, ≥10 FAQ questions in user language, clean tables/lists, explicit trade-offs in Pros & Cons, and visible E-E-A-T elements (bylined expertise, cited specs, warranty/return clarity).

- Business effects: assisted conversions, where users interacted with answers or the FAQ and then moved on to the catalog or configurators.
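Share of voice across clusters could be computed from a log of sampled AI answers, assuming each logged answer records the query's cluster and the domains it cited (how answers are sampled from each engine is outside this sketch). Here share of voice is defined as the fraction of sampled answers in a cluster that cite the brand at least once; `share_of_voice`, the log fields, and the example domain are hypothetical.

```python
from collections import defaultdict
from typing import Dict, List


def share_of_voice(answers: List[dict], brand_domain: str) -> Dict[str, float]:
    """Fraction of sampled AI answers per cluster that cite `brand_domain`."""
    total: Dict[str, int] = defaultdict(int)
    cited: Dict[str, int] = defaultdict(int)
    for ans in answers:
        cluster = ans["cluster"]
        total[cluster] += 1
        if brand_domain in ans["cited_domains"]:
            cited[cluster] += 1
    return {c: cited[c] / total[c] for c in total}
```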

Frequently Asked Questions

What do we collect every day and why?

Daily pulls include base keywords, questions, ideas, autocomplete, and subtopics from Google. This maintains a living record of user phrasing for entity maps, FAQ sourcing, and editorial prioritization, far richer than a one-off, static keyword list (a “semantic core”).

Why HDBSCAN for clustering?

HDBSCAN handles noise and doesn’t require a fixed number of clusters. With hundreds of thousands of queries, it reveals themes of varying density and filters junk, producing realistic topic groups tied to user intent.

How good are the clusters?

In the PoC, average cosine similarity was ~0.806 within clusters and ~0.001 between clusters, indicating strong cohesion and separation. That structure lets one article answer a family of closely related questions without mixing unrelated intents.
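The article does not say exactly how these averages were computed. Assuming cohesion is the mean pairwise cosine similarity among members of the same cluster and separation is the mean cosine similarity between cluster centroids, both can be sketched with the standard library. A run over ~500k embeddings would need vectorized or sampled computation instead of these pairwise loops; `cluster_quality` is a hypothetical name.

```python
import math
from itertools import combinations
from typing import Dict, List, Sequence, Tuple


def _cos(a: Sequence[float], b: Sequence[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0


def _mean(values: List[float]) -> float:
    return sum(values) / len(values) if values else float("nan")


def cluster_quality(clusters: Dict[int, List[List[float]]]) -> Tuple[float, float]:
    """Return (mean within-cluster pairwise cosine, mean between-centroid cosine)."""
    within = [_cos(a, b) for members in clusters.values()
              for a, b in combinations(members, 2)]
    # Centroid = per-dimension mean of the cluster's member vectors.
    centroids = [[sum(col) / len(members) for col in zip(*members)]
                 for members in clusters.values()]
    between = [_cos(a, b) for a, b in combinations(centroids, 2)]
    return _mean(within), _mean(between)
```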

Why Pinecone instead of a standard DB?

Vector search returns semantic matches, not only literal words. It surfaces paraphrases and neighboring formulations, improving recall and reducing intent misses during drafting and live Q&A.

What belongs in the Knowledge Base?

Catalogs, tech specs, price rules, policies, case studies, and implementation guides, in PDF, DOCX, XLS, or TXT format. The key is governance: descriptive metadata, categories, regular updates, and deduplication keep RAG precise and trustworthy.

What does a GEO-standard post look like?

One H1 with the primary keyword and the year; 5–8 H2s (≥60% are questions); 40–60-word answers beneath H2/H3; big FAQ (10+ user-language questions); objective pros/cons; lists and tables for clean extraction.
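This template lends itself to an automated lint pass. The sketch assumes Markdown drafts, an H2 titled “Frequently Asked Questions” whose questions are H3 headings ending in “?”, and a four-digit year in the H1; these conventions and the name `lint_post` are assumptions for illustration.

```python
from typing import List


def lint_post(markdown: str, primary_keyword: str, year: int) -> List[str]:
    """Return template violations for a Markdown draft; an empty list means it passes."""
    lines = [line.strip() for line in markdown.splitlines()]
    h1 = [line[2:].strip() for line in lines if line.startswith("# ")]
    h2 = [line[3:].strip() for line in lines if line.startswith("## ")]
    problems = []
    if len(h1) != 1:
        problems.append(f"expected exactly one H1, found {len(h1)}")
    elif primary_keyword.lower() not in h1[0].lower() or str(year) not in h1[0]:
        problems.append("H1 must contain the primary keyword and the year")
    if not 5 <= len(h2) <= 8:
        problems.append(f"expected 5-8 H2s, found {len(h2)}")
    if h2 and sum(title.endswith("?") for title in h2) / len(h2) < 0.6:
        problems.append("fewer than 60% of H2s are phrased as questions")
    # Count H3 questions that sit under the FAQ H2 (until the next H2).
    faq_questions, in_faq = 0, False
    for line in lines:
        if line.startswith("## "):
            in_faq = line[3:].strip().lower() == "frequently asked questions"
        elif in_faq and line.startswith("### ") and line.endswith("?"):
            faq_questions += 1
    if faq_questions < 10:
        problems.append(f"FAQ needs 10+ questions, found {faq_questions}")
    return problems
```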

How automated is the draft?

Drafting is automated; editorial judgment is not. We fact-check, add diagrams and product references, and strengthen case numbers and trade-offs—so the article is both quotable by models and genuinely useful to shoppers.

How do we reduce hallucinations?

RAG grounds generation in content retrieved from Pinecone and the KB, and prompts require sources and forbid speculation. Short answers under headings keep generation disciplined and make fact-checking fast for editors.
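A cheap guard on top of the prompt rules is to audit citations after generation. Assuming drafts cite KB chunks as `[KB:<id>]` (a hypothetical convention), this sketch flags paragraphs with no citation and citations pointing to chunks that were not retrieved for the draft, two common symptoms of hallucination. Flagged paragraphs go to an editor; opinion or transition paragraphs may legitimately lack a source.

```python
import re
from typing import Dict, List, Set

CITATION = re.compile(r"\[KB:([\w.-]+)\]")


def audit_citations(draft: str, retrieved_ids: Set[str]) -> Dict[str, List]:
    """Flag uncited paragraphs (by index) and citations to chunks that were never retrieved."""
    uncited, unknown = [], []
    paragraphs = [p.strip() for p in draft.split("\n\n") if p.strip()]
    for i, para in enumerate(paragraphs):
        if para.startswith("#"):
            continue  # headings need no citation
        ids = CITATION.findall(para)
        if not ids:
            uncited.append(i)
        unknown.extend(cid for cid in ids if cid not in retrieved_ids)
    return {"uncited_paragraphs": uncited, "unknown_ids": sorted(set(unknown))}
```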

When do results show up?

On the technical side, soon after the first publications: citations tend to appear first in AI answers to long-tail queries. Business lift depends on niche and publishing cadence; for furniture, early assisted-conversion signals typically surface within 4–8 weeks.

What does the content team need to change?

Adopt the H1/H2/H3 template, write short answers first, use lists/tables, and record sources and case metrics. In return the team gets live topics, ready-made questions, and snippets from memory + KB to accelerate production.

Where we are now and what's next

The daily pipeline is live, the UI with the Knowledge Base is built, and the content engine is wired to data. Final prompt engineering and testing are underway. Next steps: scale publication by cluster priority, monitor AI-citation share, and iterate on templates based on measured FAQ performance.

- ~500k queries processed; 2,703 main clusters + 661 additional.

- Within-cluster similarity ≈ 0.806; between-cluster ≈ 0.001.

- Governed KB and fast Pinecone retrieval ready for production runs.

As AI-powered discovery reshapes how shoppers find products, e-commerce brands face a strategic choice: wait until citation visibility becomes table stakes, or build the content and data infrastructure now, while early movers still enjoy an outsized share of voice. The DreamSofa pipeline is our answer to that question.

The architecture (daily query ingestion, semantic clustering, vector memory, and RAG-based generation) applies to e-commerce verticals with high SKU counts and strong informational intent. What we have validated in furniture should translate to fashion, electronics, home goods, and specialty retail. The question isn't whether your category needs GEO infrastructure; it's whether you'll build it before or after your competitors capture the citations.